After abandoning the web prototype, I decided to try building a prototype with the open-source game engine Godot instead. The primary reason was that it would let me develop the application once and deploy it to multiple platforms, while still building a “real” application that could work with files and interact with the OS natively.

I had recently tried Godot, building a simple game called Vlobs to learn it, and had mostly enjoyed working with it. Besides the cross-platform support, the engine also featured a modular and extensible UI system that would allow me to build the UI components I needed.

One core issue with Godot was that it had no built-in support for rendering vector graphics, which was obviously a problem for a vector graphics editor. However, Godot 4.1 introduced the ability to render SVG files to bitmaps at runtime using the ThorVG engine, and since I was planning to use an SVG-like internal format, this seemed promising. While this SVG rendering is not fast enough for rendering complex vector graphics in games, I figured it would be fast enough for this use case, since I would only need to re-render the SVG data for one layer at a time, and only when it changed.

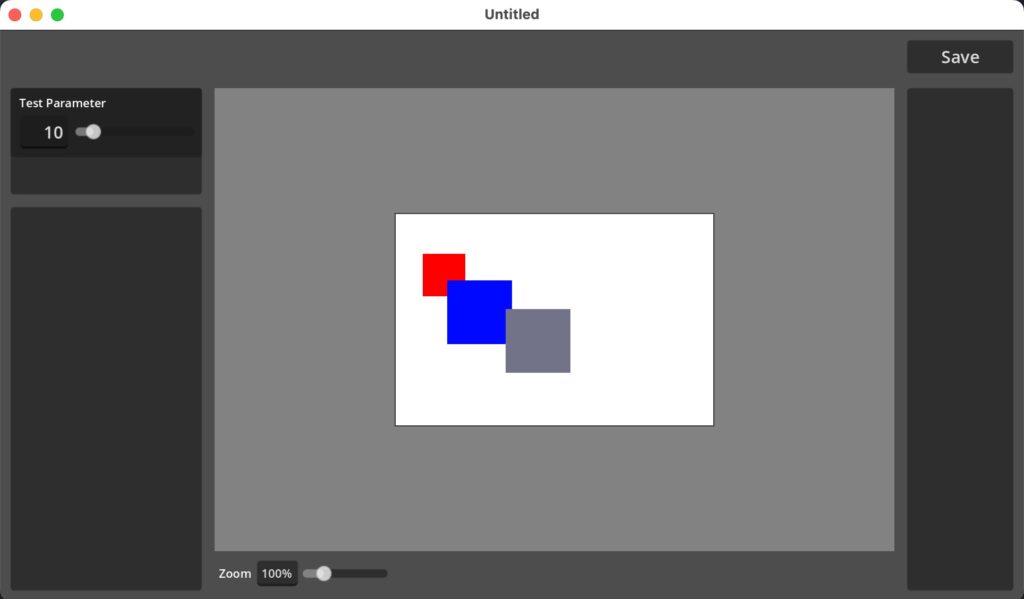

So I started reworking the structures I had developed to fit the Godot architecture and built a first Godot-based test prototype with a basic interface, the ability to generate and render SVG content, and the ability to save and load files. Eventually I had something basic, but promising enough to convince me to keep going and try to build a more functional prototype with Godot.

Godot vector graphics rendering

For those specifically interested in the SVG / vector graphics rendering solution I settled on, here’s a more in-depth explanation:

Being a high-performance game engine, Godot only draws bitmap (pixel-based) textures to the screen, using fast GPU-based drawing code. This means we first need to render our vector graphics into a bitmap texture that it can draw. Crucially, this is exactly what the ThorVG addition enabled.
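As a rough illustration of what rendering vector graphics to a bitmap involves, here is a minimal, hypothetical Python sketch (not Godot or ThorVG code): a vector shape, described only by its geometry in document units, is sampled at each pixel center to produce a grid of pixels. Real renderers such as ThorVG also handle paths, strokes and anti-aliasing; the `Circle` type and `rasterize` function are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass
class Circle:
    """Hypothetical vector shape: resolution-independent geometry in document units."""
    cx: float
    cy: float
    r: float


def rasterize(circle: Circle, width: int, height: int, scale: float = 1.0) -> list[list[int]]:
    """Sample the circle at each pixel center: 1 = covered, 0 = empty.

    `scale` maps document units to pixels, so the same shape can be turned
    into a sharp bitmap at any zoom level.
    """
    bitmap = []
    for py in range(height):
        row = []
        for px in range(width):
            # Map the pixel center back into document coordinates.
            x = (px + 0.5) / scale
            y = (py + 0.5) / scale
            inside = (x - circle.cx) ** 2 + (y - circle.cy) ** 2 <= circle.r ** 2
            row.append(1 if inside else 0)
        bitmap.append(row)
    return bitmap


if __name__ == "__main__":
    shape = Circle(cx=4, cy=4, r=3)
    for row in rasterize(shape, 8, 8, scale=1.0):
        print("".join("#" if v else "." for v in row))
```

Rendering the same `Circle` at `scale=2.0` into a 16 by 16 grid produces a proportionally larger bitmap from unchanged geometry, which is why a vector editor has to re-render rather than simply stretch the old pixels.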

To do this in a reasonably performant way, I first generate the SVG output for each layer separately, since the user will likely only edit one or a few layers at a time. This keeps each SVG we need to render less complex, and we can stack the layers on top of each other by simply compositing the rendered textures.
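A minimal sketch of the per-layer idea, written in Python rather than GDScript and assuming a hypothetical layer model that only holds circles: each layer is serialized to its own small SVG document, and only layers marked as changed are regenerated. In the actual editor the cache would hold rendered textures rather than SVG strings, and the names `Layer`, `LayerStack` and `layer_to_svg` are illustrative, not the project's code.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    """Hypothetical layer model holding circles as (cx, cy, r, fill) tuples."""
    name: str
    circles: list[tuple[float, float, float, str]] = field(default_factory=list)
    dirty: bool = True  # True when the layer's output is out of date


def layer_to_svg(layer: Layer, width: int, height: int) -> str:
    """Serialize a single layer on its own, keeping each SVG small."""
    shapes = "".join(
        f'<circle cx="{cx}" cy="{cy}" r="{r}" fill="{fill}"/>'
        for cx, cy, r, fill in layer.circles
    )
    return (
        f'<svg xmlns="http://www.w3.org/2000/svg" '
        f'width="{width}" height="{height}">{shapes}</svg>'
    )


class LayerStack:
    """Keeps one cached output per layer, ordered bottom to top."""

    def __init__(self, width: int, height: int):
        self.width = width
        self.height = height
        self.layers: list[Layer] = []
        # Stand-in for rendered textures: layer name -> last generated SVG.
        self.cache: dict[str, str] = {}

    def add_circle(self, layer_name: str, circle: tuple[float, float, float, str]) -> None:
        layer = next(lyr for lyr in self.layers if lyr.name == layer_name)
        layer.circles.append(circle)
        layer.dirty = True  # only this layer needs regenerating

    def update(self) -> list[str]:
        """Regenerate output for changed layers only; return their names."""
        updated = []
        for layer in self.layers:
            if layer.dirty:
                self.cache[layer.name] = layer_to_svg(layer, self.width, self.height)
                layer.dirty = False
                updated.append(layer.name)
        return updated

    def draw_order(self) -> list[str]:
        """Cached per-layer outputs, bottom to top, ready to be composited."""
        return [self.cache[layer.name] for layer in self.layers]


if __name__ == "__main__":
    stack = LayerStack(100, 100)
    stack.layers += [Layer("background"), Layer("ink")]
    print(stack.update())  # both layers start dirty
    stack.add_circle("ink", (50, 50, 10, "black"))
    print(stack.update())  # only "ink" is regenerated
```

Because untouched layers keep their cached output, editing the "ink" layer leaves the background alone; the engine then only has to draw the cached layers in order.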

This layer SVG is then sent to a specialized SVGView node, a TextureRect child that in turn manages a separate rendering thread, where the SVG code is rendered into an Image that is then converted into a Texture. When this process is finished, the thread reports back and the new texture is assigned to the TextureRect, displaying the rendered SVG on screen.
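The thread hand-off can be sketched in Python as follows. This is a hypothetical model of the pattern, not the SVGView source: `fake_rasterize` stands in for the SVG-to-Image-to-Texture step, and `process()` plays the role of a per-frame callback on the main thread. In Godot itself the render step would presumably use something like `Image.load_svg_from_string`, and the report-back step would go through `call_deferred`, since scene nodes should generally only be modified from the main thread. The generation counter, which discards results superseded by a newer request, is an added assumption rather than something described above.

```python
import queue
import threading
from typing import Callable, Optional


def fake_rasterize(svg: str, scale: float) -> bytes:
    # Hypothetical stand-in for the real SVG -> Image -> Texture step.
    return f"{len(svg)} chars @ {scale}x".encode()


class SVGView:
    """Sketch of an SVGView-like node that renders off the main thread."""

    def __init__(self, rasterize: Callable[[str, float], bytes] = fake_rasterize):
        self._rasterize = rasterize
        self._results: "queue.Queue[tuple[int, bytes]]" = queue.Queue()
        self._generation = 0  # only touched on the main thread
        self.texture: Optional[bytes] = None  # what the TextureRect would display

    def request_render(self, svg: str, scale: float) -> threading.Thread:
        """Called on the main thread whenever the layer's SVG changes."""
        self._generation += 1
        worker = threading.Thread(
            target=self._render_worker,
            args=(svg, scale, self._generation),
            daemon=True,
        )
        worker.start()
        return worker

    def _render_worker(self, svg: str, scale: float, generation: int) -> None:
        """Runs on the background thread: only the slow rasterization happens here."""
        image = self._rasterize(svg, scale)
        self._results.put((generation, image))  # "report back" to the main thread

    def process(self) -> None:
        """Called once per frame on the main thread; applies finished renders."""
        while True:
            try:
                generation, image = self._results.get_nowait()
            except queue.Empty:
                return
            # Ignore results from requests that a newer one has replaced.
            if generation == self._generation:
                self.texture = image
```

Keeping the texture assignment on the main thread means the worker never touches the displayed node directly; it only hands over a finished result.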

When rendering the SVG to an image, the SVGView also keeps track of the view scale (zoom level) used, so the graphics are rendered at the correct size. Because it also tracks when the scale changes, the view automatically triggers a redraw when the user zooms in or out.
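The scale tracking might look roughly like this, again as a hypothetical Python sketch: the view remembers the scale its current bitmap was rendered at, computes the pixel size needed for a new scale, and only requests a re-render when the scale actually differs. `ZoomAwareView` and the `request_render` callback are illustrative names, not the editor's API.

```python
import math
from typing import Callable, Optional


class ZoomAwareView:
    """Hypothetical sketch: re-render only when the view scale changes."""

    def __init__(
        self,
        doc_width: float,
        doc_height: float,
        request_render: Callable[[float, tuple[int, int]], None],
    ):
        self.doc_width = doc_width
        self.doc_height = doc_height
        self._request_render = request_render
        self._rendered_scale: Optional[float] = None  # scale of the current bitmap

    def target_size(self, scale: float) -> tuple[int, int]:
        # Pixels needed so one document unit covers `scale` screen pixels.
        return (math.ceil(self.doc_width * scale), math.ceil(self.doc_height * scale))

    def on_scale_changed(self, scale: float) -> bool:
        """Return True if a new render was requested."""
        if scale == self._rendered_scale:
            return False  # the existing bitmap already matches this zoom level
        self._rendered_scale = scale
        self._request_render(scale, self.target_size(scale))
        return True
```

Comparing against the last rendered scale avoids redundant renders; a real editor might additionally throttle renders during continuous zooming, which this sketch does not attempt.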